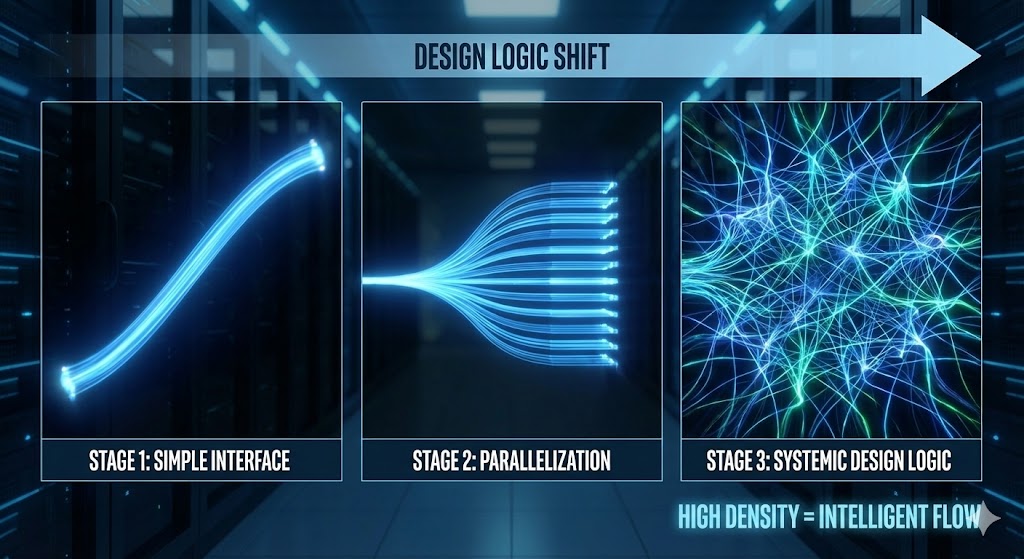

In the evolution of data center networks, changes in connector types are often seen as simple interface upgrades.

In reality, the transition from LC to MPO and now to MMC (a very small form factor, or VSFF, connector) reflects a deeper shift in system design logic, not just a change in connector size.

This shift comes down to one key question:

As bandwidth keeps growing, what problem is “high density” really solving in data centers?

During the 10G and 25G eras, and for many early 40G links, LC connectors were the standard choice in data centers.

Their design logic was straightforward:

One or two fibers per link

Clear one-to-one mapping between ports and transceivers

Simple installation and maintenance

Designed for networks with limited port counts and moderate speeds

At this stage, density mainly meant:

How many LC ports could fit on a switch front panel

How much bandwidth a single rack could support

Connectivity was simple, and fiber management was not yet a major concern.

As 100G and 400G networks became mainstream, network architecture changed:

Parallel optics replaced single-lane transmission for many short-reach links

A single port now carried 8, 12, or even 16 fibers

Bandwidth growth depended more on parallel lanes than higher speeds

MPO connectors enabled this shift, but they also introduced new challenges.

High density was no longer just about ports—it became about:

Total fiber count per rack

Cable size and routing complexity

Polarity, mapping, and planning accuracy
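Here is a rough per-rack calculation that makes the shift from port count to fiber count concrete. The switch count, port count, and fibers-per-port values are illustrative assumptions, not figures from any specific deployment. The takeaway is that fiber count scales linearly with fibers per port. Moving from duplex to parallel optics therefore multiplies the cabling load even when the number of front-panel ports stays the same.

```python
# Rough fiber-count arithmetic for one rack.
# All port counts and fibers-per-port values are illustrative assumptions,
# not measurements from a specific deployment.

def fibers_per_rack(ports_per_switch: int, switches_per_rack: int, fibers_per_port: int) -> int:
    """Total fibers terminated in a rack = ports x switches x fibers per port."""
    return ports_per_switch * switches_per_rack * fibers_per_port


# Hypothetical rack: 2 switches with 32 front-panel ports each.
duplex_lc = fibers_per_rack(32, 2, 2)      # duplex LC: 2 fibers per port
parallel_8 = fibers_per_rack(32, 2, 8)     # e.g. 4-lane parallel optics using 8 fibers
parallel_16 = fibers_per_rack(32, 2, 16)   # e.g. 8-lane parallel optics using 16 fibers

for name, count in [("duplex LC", duplex_lc),
                    ("8-fiber parallel", parallel_8),
                    ("16-fiber parallel", parallel_16)]:
    print(f"{name:>18}: {count} fibers")
```

With the same 64 ports, the rack goes from 128 fibers to 1,024 fibers. That growth is what drives the bundle size, routing, and airflow problems described next.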

In large-scale deployments, MPO-based systems revealed several limits:

Bulky fiber bundles reduce airflow and make routing difficult

Highly coupled design logic, where small errors affect many links

High operational complexity, especially during moves, adds, and changes
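MPO polarity shows how "highly coupled design logic" plays out in practice. The sketch below assumes the trunk polarity types commonly described in TIA-568 for a 12-fiber MPO: Type A is straight-through, Type B is reversed, and Type C is pair-flipped. It also assumes a hypothetical link planned around a single Type B trunk. Installing one trunk of the wrong type does not break just one fiber. It remaps every position in the connector at once.

```python
# Sketch: how MPO trunk polarity type remaps fiber positions end to end.
# Assumed mappings for a 12-fiber MPO (as commonly described in TIA-568):
#   Type A: straight-through (1->1, 2->2, ...)
#   Type B: reversed         (1->12, 2->11, ...)
#   Type C: pair-flipped     (1->2, 2->1, 3->4, ...)

N_FIBERS = 12


def trunk_map(trunk_type: str, n: int = N_FIBERS) -> dict[int, int]:
    """Return {near-end position: far-end position} for one trunk segment."""
    if trunk_type == "A":
        return {p: p for p in range(1, n + 1)}
    if trunk_type == "B":
        return {p: n + 1 - p for p in range(1, n + 1)}
    if trunk_type == "C":
        # Swap each adjacent pair: odd p -> p + 1, even p -> p - 1.
        return {p: p + 1 if p % 2 else p - 1 for p in range(1, n + 1)}
    raise ValueError(f"unknown trunk type: {trunk_type}")


def end_to_end(*trunk_types: str) -> dict[int, int]:
    """Compose several trunk segments installed in series."""
    mapping = {p: p for p in range(1, N_FIBERS + 1)}
    for t in trunk_types:
        seg = trunk_map(t)
        mapping = {src: seg[dst] for src, dst in mapping.items()}
    return mapping


planned = end_to_end("B")    # hypothetical design: one Type B trunk
installed = end_to_end("A")  # a Type A trunk is installed by mistake
wrong = [p for p in planned if planned[p] != installed[p]]
print(f"{len(wrong)} of {N_FIBERS} fiber positions land on the wrong far-end position")
```

In this hypothetical case, all 12 positions end up mismatched. A single component error becomes a failure of every lane carried on that connector.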

At this point, density stopped being a pure hardware issue.

It became a system-level management problem.

MMC was not designed simply to be a smaller MPO.

Its goal is different: increase usable density while keeping systems manageable.

Key changes in design logic include a new definition of high density, which now means:

More independent and clearly defined ports

Better port-per-area efficiency

Less reliance on thick, high-fiber cables

MMC allows parallel optics to be broken into smaller, more controlled units:

Easier planning and labeling

Clearer network topology

Better flexibility for future upgrades
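Easier planning and labeling can be pictured as a cabling plan in which every port is an independent record with its own fiber count and a predictable label. The `Port` class, the `plan_panel` helper, and the rack/panel/port/fiber naming scheme below are hypothetical and exist only for illustration. Real sites follow their own labeling conventions, for example those based on ANSI/TIA-606.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    """Hypothetical model of one independently labeled connector port."""
    rack: str
    panel: int
    port: int
    fibers: int  # fiber count for this port (illustrative value)

    def label(self) -> str:
        # Port label: <rack>-P<panel>-<port>, zero-padded so labels sort cleanly
        return f"{self.rack}-P{self.panel:02d}-{self.port:02d}"

    def fiber_labels(self) -> list[str]:
        # One label per fiber position, derived from the port label
        return [f"{self.label()}-F{f:02d}" for f in range(1, self.fibers + 1)]


def plan_panel(rack: str, panel: int, ports: int, fibers_per_port: int) -> dict[str, list[str]]:
    """Build a {port label: fiber labels} plan for one hypothetical patch panel."""
    plan = {}
    for n in range(1, ports + 1):
        p = Port(rack, panel, n, fibers_per_port)
        plan[p.label()] = p.fiber_labels()
    return plan


plan = plan_panel("R12", 3, ports=4, fibers_per_port=8)
for port_label, fibers in plan.items():
    print(port_label, fibers[0], "...", fibers[-1])
```

Each port carries its own fiber list, so a move, add, or change can be scoped to the fibers of one port instead of a whole trunk bundle.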

Modern data centers, especially those built for AI workloads, co-packaged optics (CPO), and optical switching, require:

Shorter internal links

Better airflow and thermal design

Front-to-back, modular layouts

MMC fits these system-level needs better than traditional multi-fiber connectors.

When viewed at the system level, high density addresses three core challenges:

Scaling bandwidth in limited physical space

Keeping complex systems serviceable and flexible

Controlling long-term operational cost and risk

From this perspective:

LC focused on simplicity

MPO focused on parallel transmission

MMC focuses on system clarity and long-term operation

The move from LC to MPO to MMC shows that data center connectivity is no longer just about fitting more fibers into smaller spaces.

The real challenge is designing networks that are:

Clear to plan

Easy to maintain

Ready to scale

High density is not the final goal.

A well-managed, future-ready system is.
