AI applications, especially large language models and deep learning, are dramatically increasing data center demand for compute, storage, and networking. Analysts estimate that global data creation will double over the next five years, and AI alone is expected to drive data center storage from ~10.1 ZB in 2023 to ~21.0 ZB by 2027 (≈18.5% CAGR)[1]. At the same time, AI-ready compute capacity is projected to grow roughly 33% per year (2023–2030) on a mid-range forecast, such that ~70% of new data center capacity by 2030 must be “AI-capable”[2]. High-performance GPUs and accelerators dominate this surge: modern AI servers can draw hundreds of watts per chip, yielding racks that consume 60–120+ kW each[3]. For example, McKinsey notes that average rack power densities have more than doubled (from 8 to 17 kW) in just two years, with top racks (hosting GPUs for models like GPT) exceeding 80 kW[4]. This explosive growth in computing power is outpacing traditional data center provisioning. Colocation and cloud providers are expanding existing data halls (a 30 MW facility was “large” a decade ago, whereas a 200 MW campus is now normal)[5], but even so, new capacity is leased out years in advance, and primary markets report near-zero vacancy rates[6].
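Because these growth rates compound, a short calculation helps show their scale. The sketch below uses only figures quoted in the paragraph above (33% annual growth over 2023–2030, and rack density rising from 8 kW to 17 kW in two years); the function names are illustrative, not taken from any cited report.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Total multiple after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by two endpoints."""
    return (end / start) ** (1.0 / years) - 1.0


# ~33% per year from 2023 to 2030 (figure quoted in the text)
ai_capacity_multiple = growth_multiple(0.33, 2030 - 2023)

# Average rack density: 8 kW -> 17 kW in two years (figure quoted in the text)
rack_density_cagr = cagr(8.0, 17.0, 2)

print(f"AI-ready capacity multiple, 2023-2030: {ai_capacity_multiple:.2f}x")
print(f"Implied annual growth in rack density: {rack_density_cagr:.1%}")
```

On these inputs, AI-ready capacity in 2030 would be roughly 7.4 times its 2023 level, and the rack-density jump corresponds to an annualized rate of about 46%.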

The networking and storage demands of AI workloads are surging as well. Training large models and serving vast datasets generate unprecedented east–west traffic within data centers, requiring high-bandwidth, low-latency fabrics. Some industry reports estimate that AI-optimized data centers may need 2–4× more fiber cabling than conventional hyperscale sites[7]. In practice, this means thousands of fiber strands per site, far beyond traditional cable counts[8], as well as dozens to hundreds of high-speed switches interconnected by dense fiber arrays. Latency requirements, meanwhile, differ by use case: model training is batch-oriented (so many training centers locate in remote regions with abundant power), whereas real-time inference requires placing servers close to users[9]. These trends imply a hybrid topology of massive core AI clusters (possibly in specialized zones) connected via high-bandwidth links to edge or metro data centers for inference.

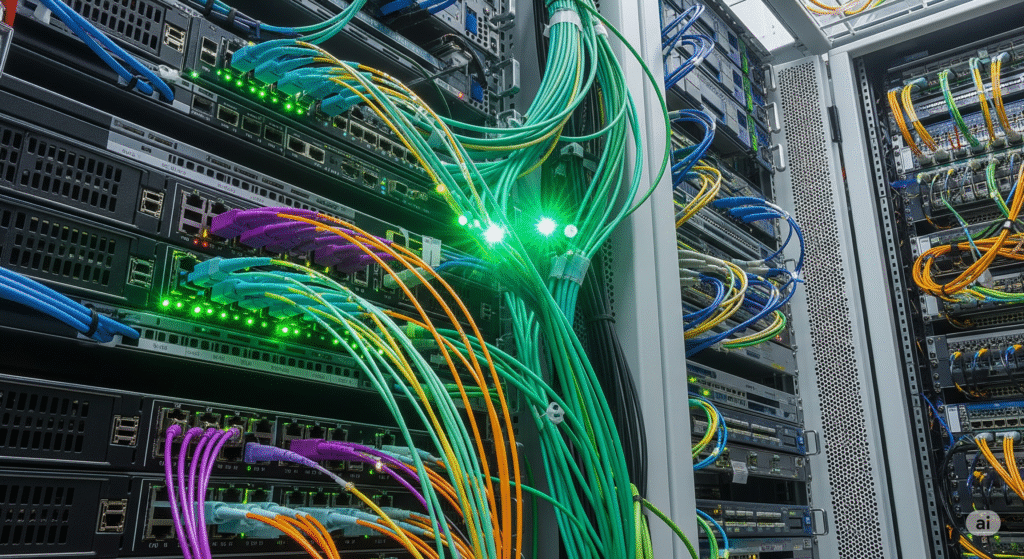

Meeting AI’s networking load strains existing data-center cabling systems. The sheer fiber count required in AI clusters has “gone from hundreds of fiber cables to thousands and tens of thousands in a very short period”[10]. However, physical pathway space (cable trays, conduits) has not scaled accordingly, creating a bottleneck: “The challenge lies in managing this increasing fiber demand within the physical constraints of the data center infrastructure”[11]. Compact cabling technologies help address this, but operational complexity rises. For example, new very-small-form-factor connectors (e.g. VSFF MTP/MPO) pack 3× the fiber into the same panel space[12], and pre-terminated fiber trunks speed deployment[13], but cable managers must track tens of thousands of strands. The result is denser, more intricate cabling layouts requiring careful planning (bend-radius control, patching, labeling) to maintain reliability and airflow.

Fiber density is just one dimension of interconnect scaling. AI clusters also increase switch/rack counts and board-to-board links. Switch ports must support 400G–800G speeds (and soon beyond), driving demand for active optical cables (AOCs) and direct optics over fiber. Vendors are developing “router-on-fiber” and photonic solutions to compress multiple lanes, but such high-density gear tends to increase cost and power for the switching plane. All these factors pressure designers to maximize rack port density, often by stacking transceivers and fiber trunks in tall cabinets.

Finally, fiber management is tied to latency requirements: training workloads can tolerate longer WAN latencies, but interactive inference or distributed training (model parallelism) must keep intra-cluster latency very low. This has led to localizing GPU clusters and using high-speed fabrics (InfiniBand or Ethernet with RoCE) internally, further emphasizing the need for dense, low-loss fiber interconnects within AI data halls.

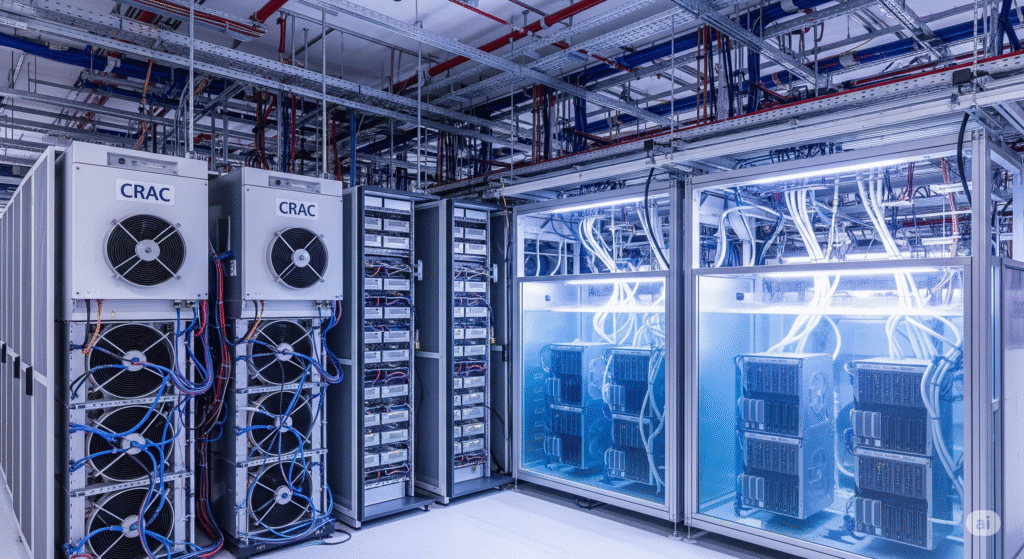

AI servers and GPU accelerators generate enormous heat. Conventional air-based cooling (CRAC units and hot/cold aisles) is typically effective only up to ~40–50 kW per rack[14]. Beyond that, the thermal load overwhelms airflow and causes hot spots. For example, McKinsey notes that a 50 kW/rack ceiling – adequate for moderate workloads – “might be adequate for AI inferencing workloads… but not for [training]”[15]. In practice, many AI training racks now operate at 60–100+ kW, far above this limit[16]. Uptime Institute data confirm this trend: although typical racks remain under ~8 kW, “a few” facilities report racks up to 100 kW[17], underscoring how cutting-edge AI deployments push heat loads toward the extreme.
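One way to see why air cooling runs out of headroom is the sensible-heat relation: the airflow needed to carry away a heat load is the load divided by (air density × specific heat × temperature rise). The sketch below applies it to a few rack powers mentioned above. The air properties (1.2 kg/m³, 1005 J/(kg·K)) are standard approximations, and the 12 K inlet-to-exhaust temperature rise is an assumption made here for illustration, not a figure from the cited sources.

```python
AIR_DENSITY_KG_M3 = 1.2            # approximate density of air near room temperature
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # approximate specific heat of air
M3S_TO_CFM = 2118.88               # cubic metres per second -> cubic feet per minute


def required_airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow needed to remove `rack_kw` of heat at a given air temperature rise.

    delta_t_k is an assumed inlet-to-exhaust temperature difference.
    """
    heat_w = rack_kw * 1000.0
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


# Rack powers taken from the ranges discussed in the text
airflow_by_rack_kw = {kw: required_airflow_m3s(kw) for kw in (8, 50, 100)}

for kw, flow in airflow_by_rack_kw.items():
    print(f"{kw:>3} kW rack: {flow:.2f} m^3/s (~{flow * M3S_TO_CFM:,.0f} CFM)")
```

Under these assumptions a 50 kW rack needs on the order of 3.5 m³/s of air, and the requirement scales linearly with rack power, which suggests why airflow alone becomes impractical at training-class densities.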

Consequently, data centers are rapidly adopting liquid-cooling techniques. Direct-to-chip (DTC) liquid cold plates and immersion cooling remove heat at its source much more effectively than air. Immersion units (like those from Asperitas and others) enclose server components in dielectric fluid, capturing 100% of the heat with minimal airflow. Rear-door heat exchangers and in-rack cold plates now routinely enable cooling of 60–120+ kW per rack[18]. In fact, industry experts observe that “data centers must be designed and optimized specifically for AI workloads – with liquid cooling being the first iteration of this new generation of modern cooling solutions”[19]. Early adopters report that these liquid methods can dramatically improve power usage effectiveness (PUE) and reduce energy spent on cooling. (For instance, some sites have seen ~10% PUE gains switching from air to liquid cooling[20].) Embedding liquid directly at the rack simplifies heat rejection and allows higher densities without impractically cold air.
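PUE is defined as total facility power divided by IT equipment power, so a reduction in cooling overhead shows up directly in the ratio. The sketch below illustrates how a ~10% PUE improvement could arise. All load figures (1,000 kW of IT load, 400 kW versus 250 kW of cooling, 100 kW of other overhead) are hypothetical values chosen for illustration, not measurements from any site.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


# Hypothetical loads, for illustration only
IT_KW = 1000.0
OTHER_KW = 100.0  # power distribution losses, lighting, etc.

pue_air = pue(IT_KW, cooling_kw=400.0, other_overhead_kw=OTHER_KW)
pue_liquid = pue(IT_KW, cooling_kw=250.0, other_overhead_kw=OTHER_KW)
improvement = (pue_air - pue_liquid) / pue_air

print(f"Air-cooled PUE:    {pue_air:.2f}")
print(f"Liquid-cooled PUE: {pue_liquid:.2f}")
print(f"Relative improvement: {improvement:.0%}")
```

With these assumed loads, PUE falls from 1.50 to 1.35, a relative improvement of 10%.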

By contrast, traditional CRAC (air-conditioning) units have limited scalability. In a legacy system (as shown) chilled air circulates below raised floors or through plenum ducts. However, as rack heat loads climb into tens of kilowatts, dozens of CRAC units would be required (driving up space and fan power). Operators are finding that even with improved airflow containment, physics limits are reached: a recent survey noted that while average racks are ~7 kW, some respondents now have racks up to 50–100 kW[21], which traditional HVAC cannot handle. Thus, many data halls are being retrofitted with rack-level cooling (cold-aisle containment, rear-door exchangers, or liquid loops) rather than relying solely on air handlers.
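A rough count makes the scaling problem concrete. The sketch below estimates how many CRAC units a hall would need at different rack densities. The 100-rack hall, the 100 kW of cooling capacity per unit, and the single spare unit are hypothetical planning values assumed here; real unit capacities vary by model and operating conditions.

```python
import math


def crac_units_needed(racks: int, kw_per_rack: float,
                      unit_capacity_kw: float = 100.0, spares: int = 1) -> int:
    """Units required to absorb the hall's heat load, plus spare units.

    unit_capacity_kw and spares are assumed values for illustration.
    """
    heat_kw = racks * kw_per_rack
    return math.ceil(heat_kw / unit_capacity_kw) + spares


# Same hypothetical 100-rack hall at a legacy density and at an AI training density
units_legacy = crac_units_needed(racks=100, kw_per_rack=7)
units_ai = crac_units_needed(racks=100, kw_per_rack=80)

print(f"Units at  7 kW/rack: {units_legacy}")
print(f"Units at 80 kW/rack: {units_ai}")
```

Under these assumptions the same hall goes from 8 units to 81, which is the kind of jump in floor space and fan power that pushes operators toward rack-level cooling.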

AI workloads are also driving electricity demand sharply higher. McKinsey projects that U.S. data center power draw will reach ~600 TWh by 2030 (≈11.7% of U.S. electricity) under a mid-range growth scenario[22]. Globally, the IEA estimates that data center energy use will more than double by 2030 (to ~945 TWh) due to AI[23], roughly matching Japan’s current total electricity consumption. Other industry forecasts (e.g. Goldman Sachs) predict a 50% increase in data center power demand by 2027 and up to +165% by 2030, driven largely by AI development. BCG likewise expects worldwide data-center power demand to grow at ~16% CAGR from 2023–2028, reaching ~130 GW by 2028[24].
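These forecasts mix annual energy (TWh) and power (GW), so it can help to convert between them. The sketch below turns the quoted annual energy figures into average continuous power, and back-calculates the 2023 baseline implied by a 16% CAGR reaching 130 GW in 2028. The helper names are illustrative, and average power is not the same as peak demand or installed capacity.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def average_gw(twh_per_year: float) -> float:
    """Average continuous power (GW) equivalent to an annual energy use (TWh)."""
    return twh_per_year * 1000.0 / HOURS_PER_YEAR  # TWh -> GWh, then divide by hours


def implied_base(end_value: float, annual_rate: float, years: int) -> float:
    """Starting value implied by an end value and a compound annual growth rate."""
    return end_value / (1.0 + annual_rate) ** years


us_2030_avg_gw = average_gw(600)       # U.S. projection quoted in the text
global_2030_avg_gw = average_gw(945)   # global projection quoted in the text
base_2023_gw = implied_base(130, 0.16, 2028 - 2023)  # from the 16% CAGR figure

print(f"U.S. 2030 average draw:   {us_2030_avg_gw:.1f} GW")
print(f"Global 2030 average draw: {global_2030_avg_gw:.1f} GW")
print(f"Implied 2023 baseline:    {base_2023_gw:.1f} GW")
```

On these figures, 600 TWh per year corresponds to roughly 68 GW of continuous draw and 945 TWh to roughly 108 GW, while the 16% growth path implies a 2023 baseline of about 62 GW.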

This power surge strains the utility and on-site infrastructure. Many existing grids were not designed for contiguous multi-megawatt loads in suburban data-center parks. In some regions (e.g. Northern Virginia or Dublin), utilities have paused new hookups as transmission upgrades lag behind demand[25]. By 2030, data centers could use ~28% of Ireland’s grid power, prompting moratoria on new sites[26]. Operators must therefore work closely with utilities and regulators to secure higher-capacity feeders, local generation (renewables, fuel cells, small modular reactors), and demand-response strategies. McKinsey notes that some AI data centers are even colocating next to power plants or adding behind-the-meter generation (fuel cells, solar, batteries) to mitigate grid limits[27].

Within the data center, the electrical distribution systems are also being rethought. High power densities mean larger switchgear, transformers, and bus ducts are required to feed each hall. At rack level, some hyperscalers are exploring 48-volt DC architectures (instead of the traditional 12-volt DC supply) to reduce conversion losses[28]. UPS and PDU capacity must also grow: a single high-density hall might need tens of MW of UPS power. Interestingly, because AI training jobs are batchable and less “mission-critical” than enterprise 24×7 services, operators sometimes choose lower redundancy levels for training clusters (for example, fewer N+1 generators or smaller UPS systems) to save cost, accepting that after a brief outage the training jobs can simply be restarted. Overall, though, the combination of enormous power draw and sustainability goals is forcing data centers to push on energy efficiency (very low PUE), source renewables, and even participate in grid-scale demand-response programs.
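The case for a higher rack bus voltage follows from basic circuit arithmetic: for a fixed load, current is power divided by voltage, and resistive loss in the conductors is current squared times resistance. The sketch below compares 12 V and 48 V delivery for the same load. The 1 kW load and 1 mΩ path resistance are hypothetical values chosen for illustration, and the model covers only conductor losses, not converter efficiency.

```python
def conduction_loss_w(load_w: float, bus_voltage_v: float,
                      path_resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the delivery path for a given load and bus voltage."""
    current_a = load_w / bus_voltage_v
    return current_a ** 2 * path_resistance_ohm


# Hypothetical values, for illustration only
LOAD_W = 1000.0
PATH_RESISTANCE_OHM = 0.001

loss_12v = conduction_loss_w(LOAD_W, 12.0, PATH_RESISTANCE_OHM)
loss_48v = conduction_loss_w(LOAD_W, 48.0, PATH_RESISTANCE_OHM)
loss_ratio = loss_12v / loss_48v

print(f"Loss at 12 V: {loss_12v:.2f} W")
print(f"Loss at 48 V: {loss_48v:.2f} W")
print(f"Ratio: {loss_ratio:.0f}x")
```

Because current falls by a factor of four at 48 V, conductor loss falls by a factor of sixteen for the same load and wiring, regardless of the specific resistance assumed.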

Finally, AI acceleration raises facility floor-space challenges. High-density racks occupy the same footprint but require more power and cooling per square foot. Many colocation sites have standard rack rows, but AI hardware forces thicker cable bundles and extra cooling gear, effectively using more room per rack. As one industry expert noted, “we’re now asking data center operators to move from supporting 6–12 kW per rack to 40, 50, 60, and even more kW per rack”[29]. To accommodate this, new data halls often feature higher floor-loading capacity (to support heavy cabinets) and taller ceilings for heat exhaust.

Pathway and floor space are also reallocated. New cable trays and containment take volume; CRAC units or in-row heat exchangers consume aisles. As noted earlier, fiber bundle density strains under-floor plenum space. Some providers combat this by using overhead cabling trays or specialized containment housings. Moreover, security and access designs are adapting: AI racks are highly trusted (private clusters) but may need additional fire suppression or intrusion protection due to their value.

In summary, the shift to AI workloads is pushing data-center infrastructure to extremes of density. Network interconnects must be far more fiber-dense and low-latency[30]. Cooling systems must evolve from air to liquid at rack scale[31]. Electrical systems must supply tens of MW per building[32]. In each case, operators face the physical limits of existing designs. Meeting these challenges will require forward-looking planning (site power agreements, modular expansion, advanced cabling standards) and continued innovation in power/cooling density.

